Bandit

Bird Banding Software Task Analysis

ABOUT

Bird banders throughout North America record their banding data into the Bandit software developed by the U.S. Geological Survey's Bird Banding Lab (BBL). This data is used to aid in monitoring the population ecology of bird species. Bandit is a comprehensive tool that covers nearly any scenario that a bander could possibly encounter in their field research.

My teammate and I conducted task-based usability testing with four users of Bandit to better understand their behavior and challenges in the current software, and what they would like in a web-based tool. Using what we learned from conducting contextual inquiries, task analysis sessions, and an eye-tracking session, we presented our findings and recommendations to the client. Deliverables for this project included a presentation, a printed and bound task analysis, and a short video highlighting user responses during contextual inquiry.

PROCESS

CONTEXTUAL INQUIRY

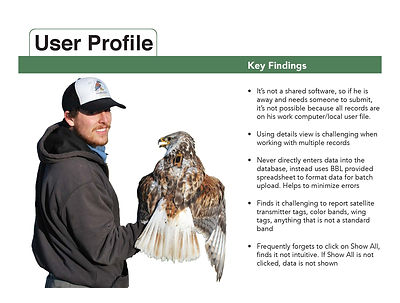

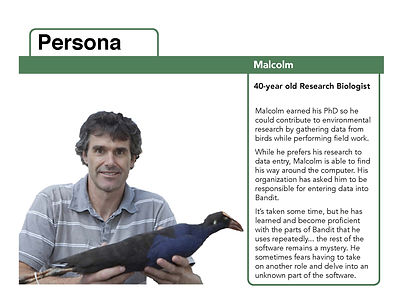

We conducted three field interviews to explore how current users interact with BBL's Bandit software. Rather than presenting these users with specific tasks, we asked them to walk us through their normal method for entering data. Users were asked to talk us through each step, telling us which parts of the interface worked well, venting when things went wrong, and suggesting anything they thought might be useful. From these interviews we developed a user profile (based on a single user), a user matrix (a comparison of users), and a user persona (a combination of users).

TASK ANALYSIS

After gaining an understanding of how users perform their daily tasks in Bandit, we investigated how users would perform when given specific tasks to complete. The BBL developed specific scenarios, based on common errors, to engage our test participants. To minimize the chance of prior exploration, our users were given the scenarios as close to the time of testing as possible and were asked to work through each scenario while thinking aloud. The aim was to complete five tasks within one hour. We were able to invite one local user to the University of Baltimore's User Research Lab to perform the tasks using Tobii eye-tracking equipment.

Sample of Scenarios

Scenario #1 needs to be done FIRST as it sets up the testing environment for all five of the scenarios. Other scenarios should be done in different orders for different banders because not all banders may be able to complete all five scenarios in the time allowed, but each student group should see at least one example of Scenarios #2 - #5. Note that Scenario #3 can only be completed once the bander has completed Scenario #2.
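The ordering rules above can be sketched in a few lines of Python. This is only an illustration of the constraints as stated (Scenario #1 first, the rest in varying order, #3 after #2); the function names `scenario_order` and `plan_session` are hypothetical and were not part of the BBL's materials. It does not account for the time limit, so it cannot by itself guarantee that every scenario gets seen.

```python
import random


def scenario_order(rng):
    """Return one bander's order: Scenario 1 first, then 2-5 shuffled,
    with Scenario 3 always placed after Scenario 2."""
    rest = [2, 3, 4, 5]
    rng.shuffle(rest)
    i2, i3 = rest.index(2), rest.index(3)
    if i3 < i2:
        # Swap the two so that Scenario 2 comes before Scenario 3.
        rest[i2], rest[i3] = rest[i3], rest[i2]
    return [1] + rest


def plan_session(num_banders, seed=0):
    """Build one scenario order per bander (seeded so the plan is repeatable)."""
    rng = random.Random(seed)
    return [scenario_order(rng) for _ in range(num_banders)]


if __name__ == "__main__":
    for bander, order in enumerate(plan_session(4), start=1):
        print(f"Bander {bander}: {order}")
```
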

Scenario #1 (ALL BANDERS DO THIS TEST FIRST)

You have been requested by the U.S. Fish and Wildlife Service to band a breeding pair of Red-tailed Hawks that were recently relocated to a nearby conservation area near Hayes, Kansas where you have never banded birds before. First please create the band inventory and add the new location. After completing the inventory and new location, input the banding data and resolve any error message that appears.

Supporting files:

- Description of band span
- Description of location
- Datasheet for scenario 1

Scenario #2

At the same conservation area near Hayes, Kansas, you have captured and banded five Red-tailed Hawks. You have tagged each of them with a federal band, wing tag (red tag with white codes A01 to A05) and color leg band (blue anodized bands with silver codes X28 to X32). Go through the process of inputting the band and auxiliary marker data into Bandit and resolve any error message that appears.

Supporting files:

- Datasheet for scenario 2

Scenario #3 (must be completed AFTER Scenario #2)

At the same conservation area near Hayes, Kansas, you have recaptured a Red-tailed Hawk with the following markers (red wing tag with white code A03 and blue anodized leg band with silver code X30). Using Bandit, submit the data for a recaptured bird.

Supporting files:

- Datasheet for scenario 3

time to task analysis

collecting eye-tracking data using Tobii

RESULTS

USABILITY REPORT

With our research completed, my partner and I examined the results and developed recommendations based upon user needs and the client's priorities and concerns. The resulting presentation and report included a description of methodology, key findings and recommendations, additional/secondary findings to consider, and user profiles. Key findings and recommendations included:

Interface and Navigation are not intuitive

Improve user experience and access by updating the interface design to meet compliance requirements for Section 508.

- decrease user fatigue by limiting navigation options (image below illustrates the number of distinct navigation choices on one page)
- make the navigation panel visually distinctive by strengthening contrast or using a color other than gray
- reduce technical jargon, replacing it with plain language where possible
- ensure buttons look like buttons

Reference Tab and Help are overlooked

Most users were not aware of the Help and Reference sections. Some will email the BBL help desk, but other users admitted to knowingly submitting erroneous data.

- have on-page tutorials for specific functions (an example of a pop up help feature is shown below)
- use a filtering feature to help users narrow down status code choices
- ensure Help and Reference sections are prominently displayed and not lost in the navigation
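
As a rough illustration of the filtering recommendation, the sketch below narrows a code list as the user types. The function `filter_codes` and the entries in `PLACEHOLDER_CODES` are hypothetical stand-ins, not real Bandit status codes or any part of the existing software.

```python
# Placeholder (code, label) pairs; these are NOT real Bandit status codes.
PLACEHOLDER_CODES = [
    ("S1", "Placeholder: healthy wild bird"),
    ("S2", "Placeholder: injured bird"),
    ("S3", "Placeholder: relocated bird"),
]


def filter_codes(codes, query):
    """Return the (code, label) pairs whose code or label contains the query.

    Matching is case-insensitive; an empty query returns the full list.
    """
    q = query.strip().lower()
    if not q:
        return list(codes)
    return [
        (code, label)
        for code, label in codes
        if q in code.lower() or q in label.lower()
    ]


if __name__ == "__main__":
    print(filter_codes(PLACEHOLDER_CODES, "inj"))
```
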

Too many fields in Bandit

Bandit is comprehensive and covers nearly any scenario a bander may encounter. This does become problematic for the majority of users who generally perform only basic banding data entry. Eye-tracking software was able to illustrate (below) our user's difficulty in finding the fields they needed to perform their task.

- remove table view, instead create entry points based on user goal or function
- organize by level; introduce Bandit "lite" for basic banding data; add advanced levels
- introduce different entry points

Code frustrations in Bandit

As with the surplus of fields, the pull-down lists contain many codes that most banders never use. The codes are not meaningful to banders (e.g., "LP" represents Molt Limit Present), and they are not listed in a logical order.

- place commonly used codes at top of dropdown; or, include only common codes and move all others to a special cases dropdown
- enable users to make and use favorites
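
One way these two recommendations could work together is sketched below: favorites come first, followed by the remaining codes ordered by how often the bander has used them. The function `order_codes` and every code other than "LP" are hypothetical; this is not how Bandit currently orders its dropdowns.

```python
from collections import Counter


def order_codes(all_codes, usage_history, favorites=()):
    """Order dropdown codes: favorites first, then the rest by past usage.

    all_codes     -- codes in their current (default) list order
    usage_history -- codes the bander has entered before, repeats included
    favorites     -- codes the bander has pinned, in the order they chose
    """
    counts = Counter(usage_history)
    pinned = [code for code in favorites if code in all_codes]
    pinned_set = set(pinned)
    rest = [code for code in all_codes if code not in pinned_set]
    # list.sort is stable, so equally used codes keep their default order.
    rest.sort(key=lambda code: -counts[code])
    return pinned + rest


if __name__ == "__main__":
    # "AA", "BB", and "CC" are made-up codes used only for illustration.
    codes = ["AA", "BB", "LP", "CC"]
    history = ["LP", "LP", "CC"]
    print(order_codes(codes, history, favorites=["BB"]))
```
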

Time Intensive

When a user has a large quantity of bands or locations to enter, Bandit takes a very long time to load as the screen is scrolled vertically or horizontally.

- allow easier uploading of data (i.e. upload a .txt file directly into Band Inventory)
- use a powerful database management system to allow for quicker loading/uploading
- autofill applicable information for any band number